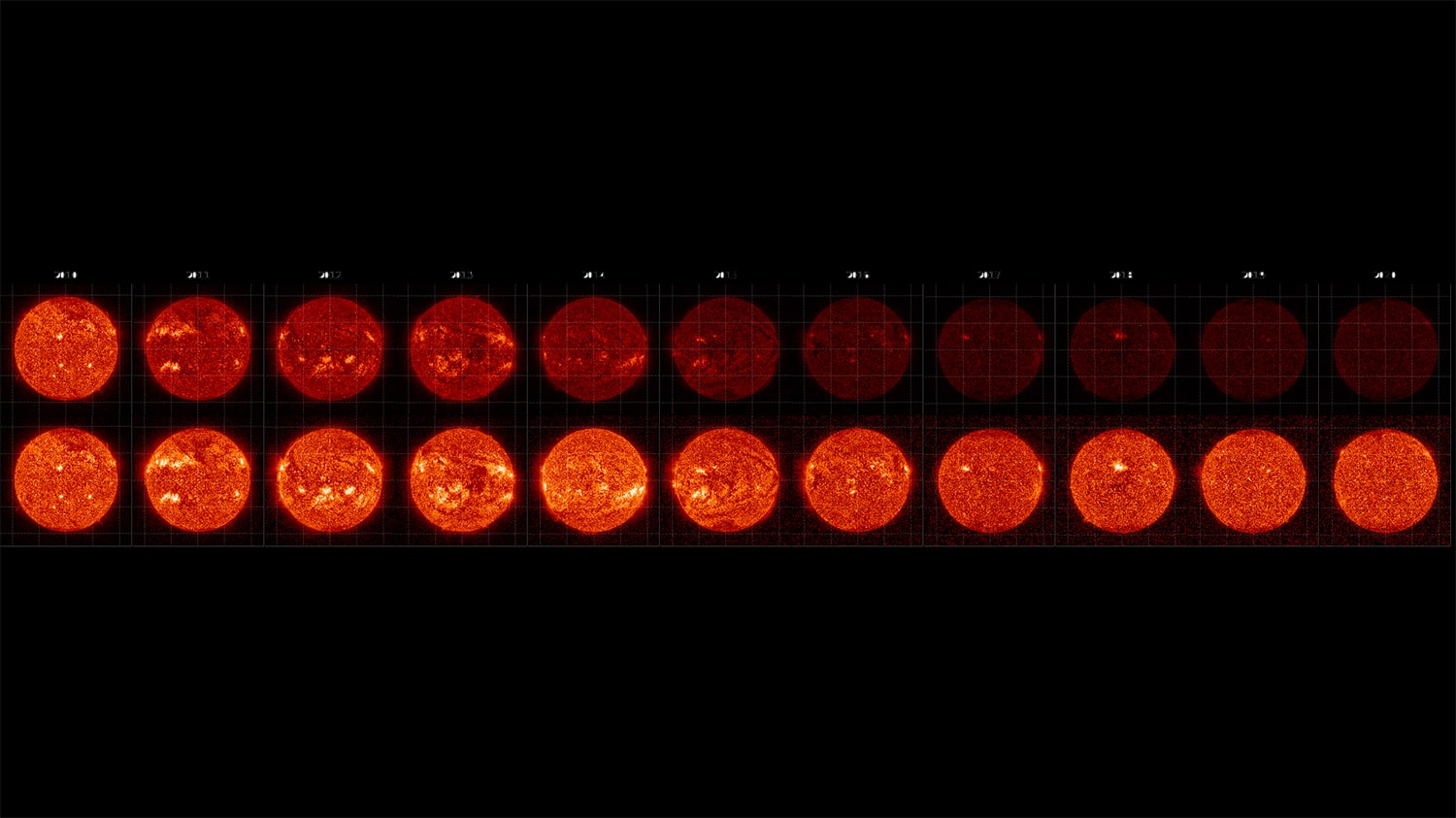

Since its launch on February 11, 2010, NASA’s Solar Dynamics Observatory, or SDO, has provided high-definition images of the Sun for more than a decade, giving scientists a detailed look at a wide range of solar phenomena.

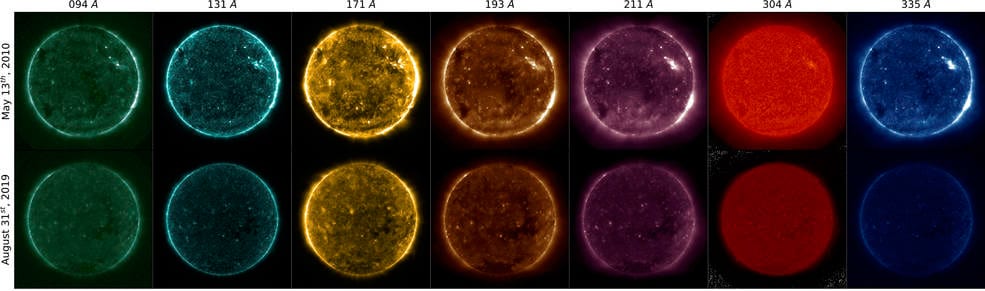

SDO uses its Atmospheric Imaging Assembly (AIA) to look at the Sun continuously, taking images in 10 wavelengths every 12 seconds. This produces a wealth of information about our Sun that was never previously possible. Because it stares at the Sun constantly, however, AIA degrades over time, so its data must be calibrated frequently.

Now, scientists are using artificial intelligence to help calibrate some of NASA’s images of the Sun, improving the data that researchers rely on for solar research.

The approach relies on machine learning, in which an algorithm learns how to perform its task from examples.

Scientists began by training the machine learning algorithm to recognize solar structures and compare them using AIA data. They did this by giving the algorithm images from sounding rocket calibration flights and telling it the correct amount of calibration each image needed.

After enough of these examples, they gave the algorithm similar images to see whether it would identify the correct calibration needed. With enough data, the algorithm learns to determine how much calibration each image requires.

Because AIA looks at the Sun in multiple wavelengths of light, scientists can also use the algorithm to compare specific structures across the wavelengths and strengthen its assessments.

To start, they taught the algorithm what a solar flare looks like by showing it solar flares across all of AIA’s wavelengths until it could recognize them in every type of light. Once the program can identify a solar flare without any degradation, it can then determine how much degradation affects AIA’s current images and how much calibration each one needs.

“This was the big thing,” Dos Santos said. “Instead of just identifying it on the same wavelength, we’re identifying structures across the wavelengths.”

With this new process, researchers are poised to continually calibrate AIA’s images between calibration rocket flights, improving the accuracy of SDO’s data for scientists.

Continue reading Calibrating NASA’s images of the Sun using AI on Tech Explorist.
